AI voice cloning has emerged as one of the most transformative applications of artificial intelligence. With rapid advancements in deep learning and speech synthesis, it's now possible to clone a human voice with astonishing accuracy using just a few seconds of audio input. At the heart of this innovation are AI voice cloning APIs: cloud-based platforms and tools that developers, businesses, and creators use to generate lifelike speech from text.

These APIs are powering next-gen applications in entertainment, customer service, accessibility, gaming, virtual assistants, and more. From creating voiceovers that sound like real people to reviving historic voices or personalizing user experiences, voice cloning APIs are unlocking new dimensions in synthetic media.

What Are AI Voice Cloning APIs?

Voice Cloning APIs are software interfaces that allow developers to integrate voice synthesis and cloning capabilities into their applications. These APIs use machine learning models, particularly neural text-to-speech (TTS) models and, in some systems, generative adversarial networks (GANs), to replicate the vocal traits, tone, inflection, and rhythm of a target voice.

They typically offer:

- Text-to-Speech (TTS) synthesis

- Speaker cloning or voice imitation

- Multi-speaker and multilingual support

- Real-time streaming or file-based audio generation

How AI Voice Cloning Works

AI voice cloning involves three main steps:

- Voice Data Collection: A few seconds to a few minutes of clean audio are recorded from the target speaker.

- Voice Embedding: A machine learning model analyzes this data to extract vocal characteristics (pitch, cadence, accent).

- Synthesis: When new text is input, the model uses this embedding to generate speech in the cloned voice.

Neural models such as Tacotron and FastSpeech (acoustic models), WaveNet (a neural vocoder), and VITS (an end-to-end model) are often used under the hood.
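
To make the three steps concrete, here is a deliberately simplified Python sketch. It is not how any production model works: real systems use a learned neural encoder, while this toy version just averages made-up feature frames into a normalized vector. The names `embed_speaker`, `cosine_similarity`, and `synthesize` are hypothetical and exist only for illustration.

```python
import math
from typing import Dict, List, Sequence

Frame = Sequence[float]  # one frame of acoustic features (toy stand-in for real audio)


def embed_speaker(frames: List[Frame]) -> List[float]:
    """Step 2 (toy): average frame features into one vector, then L2-normalize it."""
    if not frames:
        raise ValueError("need at least one frame of audio features")
    dim = len(frames[0])
    mean = [sum(frame[i] for frame in frames) / len(frames) for i in range(dim)]
    norm = math.sqrt(sum(x * x for x in mean)) or 1.0
    return [x / norm for x in mean]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Compare two embeddings; values near 1.0 suggest similar voices."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def synthesize(text: str, speaker_embedding: List[float]) -> Dict[str, object]:
    """Step 3 (placeholder): a real model would return audio samples.

    This stub only returns the inputs a synthesis model would be conditioned on.
    """
    return {"text": text, "conditioning": speaker_embedding}


# Step 1 (toy): pretend these frames were extracted from a short recording.
reference_frames = [[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]]
embedding = embed_speaker(reference_frames)
request = synthesize("Hello there", embedding)
```

The point of the sketch is the data flow: the reference audio is reduced once to a fixed-size embedding, and every later synthesis call reuses that embedding alongside new text.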

Key Use Cases of AI Voice Cloning APIs

| Industry | Application |
| --- | --- |
| Media & Entertainment | Dubbing, voiceovers, audiobooks, podcast production |
| Customer Service | Personalized IVR systems, AI voice bots |
| Gaming | NPC voice generation, dynamic storytelling |
| Healthcare | Voice restoration for patients with speech loss |
| Accessibility | Custom voices for text-to-speech tools |
| Education | Interactive learning materials, multilingual content |
| Marketing | Voice-driven ads, brand voice replication |

Benefits of Using Voice Cloning APIs

- Scalability: Generate thousands of unique audio clips programmatically.

- Cost-Effective: Reduce the need for studio recording and voice actors.

- Consistency: Maintain vocal tone and branding across all platforms.

- Speed: Produce voice content in minutes, not hours or days.

- Personalization: Customize voice experiences for individual users.

Top 10 AI Voice Cloning APIs in 2025

1. ElevenLabs

Best For: Ultra-realistic voice cloning for content creators and developers.

Overview: ElevenLabs is a leading voice AI platform known for its Prime Voice AI, which delivers human-like expressiveness with high fidelity. It supports multi-lingual voice cloning and real-time streaming.

Key Features:

- Clone a voice using just 1 minute of audio

- Speech synthesis with emotional variation

- Real-time streaming API

- Voice Library with pre-trained voices

Use Case: YouTubers, audiobook narrators, game developers

Pros:

- Extremely lifelike voices

- Fast response time

- Web-based and API access

Cons:

- Some limitations in free tier
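
As a rough idea of what an integration looks like, the sketch below builds (but does not send) a text-to-speech request using only the Python standard library. The endpoint path, the `xi-api-key` header, and the `voice_settings` fields reflect ElevenLabs' public REST API as commonly documented, but treat them as assumptions and confirm them against the current API reference. `YOUR_API_KEY`, `YOUR_VOICE_ID`, and the helper name `build_tts_request` are placeholders.

```python
import json
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1"  # assumed base URL; verify in the docs


def build_tts_request(
    api_key: str,
    voice_id: str,
    text: str,
    model_id: str = "eleven_multilingual_v2",  # assumed model name
) -> urllib.request.Request:
    """Build a POST request asking the service to speak `text` in a given voice."""
    payload = {
        "text": text,
        "model_id": model_id,
        # Illustrative values only; tune them for your own voice.
        "voice_settings": {"stability": 0.5, "similarity_boost": 0.75},
    }
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello from a cloned voice.")
# To actually call the API (requires a valid key and network access):
# audio_bytes = urllib.request.urlopen(request).read()
```

Keeping request construction separate from the network call makes it easy to unit-test the payload and to swap in a different HTTP client later.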

2. Resemble.ai

Best For: Custom branded voice creation for enterprises.

Overview: Resemble.ai allows users to build synthetic voices with emotional expressiveness. It supports instant voice cloning and emotion tagging.

Key Features:

- Clone voices with just 3–5 minutes of audio

- Real-time voice generation

- API + drag-and-drop editor

- AI-powered voice dubbing

Use Case: Ad tech, localization, enterprise IVRs

Pros:

- Emotion-aware cloning

- Secure, GDPR-compliant

- Multilingual support

Cons:

- Enterprise plans can be expensive

3. Play.ht

Best For: Voiceovers for podcasts, videos, and e-learning.

Overview: Play.ht provides a TTS and voice cloning API with high-quality voices in 100+ languages. It’s widely used by content creators and educators.

Key Features:

- Voice cloning via voice samples

- SSML support for emphasis and pauses

- Downloadable MP3 or WAV output

- Word-level timestamps

Use Case: Podcasters, instructors, marketers

Pros:

- Wide language and accent support

- Word-level audio control

- Easy to integrate API

Cons:

- Voice training requires manual approval
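
SSML (Speech Synthesis Markup Language) is a W3C standard, so the markup itself is portable even though each provider supports a different subset of tags. The following sketch uses Python's built-in XML library to build a small SSML document with emphasis and a pause. The helper name `build_ssml` is hypothetical, and whether a given voice honors `<emphasis>` or `<break>` is something to check in that provider's documentation.

```python
import xml.etree.ElementTree as ET


def build_ssml(before: str, emphasized: str, after: str, pause_ms: int = 500) -> str:
    """Return SSML that stresses one phrase and then pauses before continuing."""
    speak = ET.Element("speak")
    speak.text = before
    emphasis = ET.SubElement(speak, "emphasis", level="strong")
    emphasis.text = emphasized
    pause = ET.SubElement(speak, "break", time=f"{pause_ms}ms")
    pause.tail = after  # text that follows the pause
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml("Welcome to ", "our show", " Let's begin.")
print(ssml)
```

Building the markup with an XML library rather than string concatenation means special characters in user-supplied text (such as `&` or `<`) are escaped automatically.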

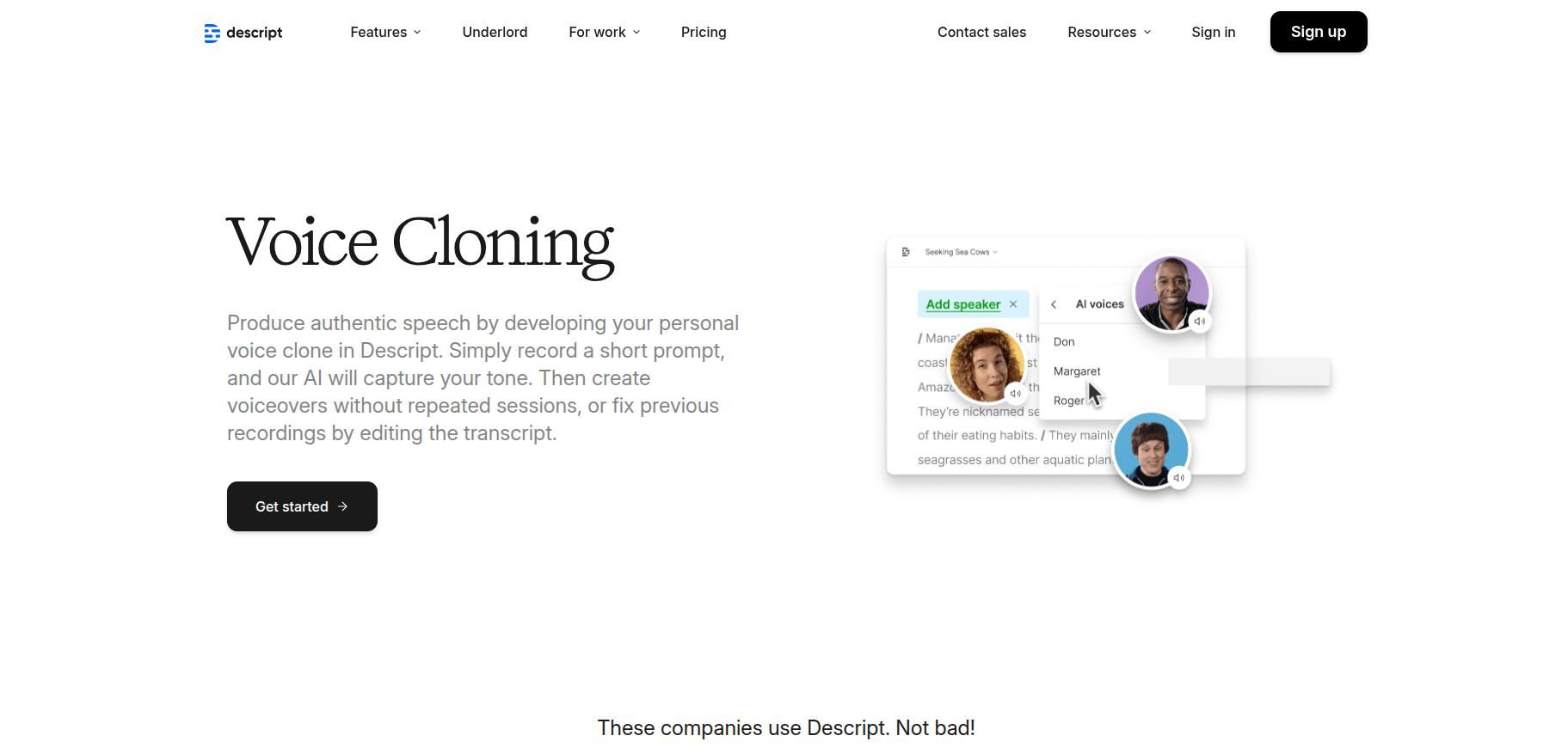

4. Descript Overdub

Best For: Podcasters and content editors using AI audio editing.

Overview: Descript’s Overdub allows users to create a digital version of their own voice, perfect for correcting mistakes in audio without rerecording.

Key Features:

- Fast generation of speech in your cloned voice from typed text

- Seamless editing in audio timelines

- Speaker labels and transcripts

Use Case: Podcasts, interviews, YouTube videos

Pros:

- User-friendly interface

- Built into Descript editor

- Privacy-first voice cloning

Cons:

- Only available after voice consent and training

5. iSpeech

Best For: Developers looking for robust TTS and voice cloning.

Overview: iSpeech offers scalable APIs for TTS and voice cloning with both cloud and on-premise options. It supports real-time voice generation in multiple languages.

Key Features:

- Custom voice creation with training data

- Real-time synthesis

- Multi-language voices

- Scalable API infrastructure

Use Case: Apps, automotive, customer service bots

Pros:

- Highly customizable

- Used in enterprise systems

- Good multilingual support

Cons:

- Documentation can be limited for new users

6. Coqui

Best For: Open-source voice cloning and local deployment.

Overview: Coqui provides open-source text-to-speech and voice cloning tooling (the Coqui TTS toolkit), including models such as VITS. It allows developers to train and run custom voices offline.

Key Features:

- Open-source models (TTS & STT)

- Voice training with datasets

- Real-time synthesis support

Use Case: Researchers, self-hosted apps, privacy-focused projects

Pros:

- 100% local deployment

- No vendor lock-in

- Active developer community

Cons:

- Requires technical expertise to run

7. Microsoft Azure Neural TTS

Best For: Enterprise-grade voice synthesis with reliability.

Overview: Microsoft’s neural TTS, part of the Azure AI Speech service (previously branded under Azure Cognitive Services), provides natural-sounding voices, including custom neural voice models.

Key Features:

- SSML and style-based speech

- Custom voice training with approval

- Global language support

- Real-time streaming API

Use Case: IVR systems, accessibility tools, enterprise apps

Pros:

- Scalable cloud infrastructure

- Strong security and compliance

- Highly customizable

Cons:

- Voice cloning requires manual approval

8. Google Cloud Text-to-Speech

Best For: Developers building apps with Google Cloud infrastructure.

Overview: Google Cloud TTS includes WaveNet-powered voices and allows cloning via its custom voice feature (limited access).

Key Features:

- 220+ voices in 40+ languages

- WaveNet and neural vocoders

- Audio profiles for different devices

Use Case: Mobile apps, virtual assistants, smart devices

Pros:

- Easy integration with Google services

- High-quality neural voices

- Reliable API uptime

Cons:

- Voice cloning access is gated and restricted
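
For a sense of how the audio profiles feature is used, the sketch below builds the JSON body for the REST `text:synthesize` method and decodes the base64 audio the service returns. The field names (`input`, `voice`, `audioConfig`, `effectsProfileId`, `audioContent`) follow the v1 REST API as I understand it; the voice name and device profile are example values, and the helper names are hypothetical. Authentication and the HTTP call itself are left out.

```python
import base64
import json
from typing import Any, Dict

# Assumed endpoint for the v1 REST API; confirm in the official reference.
SYNTHESIZE_URL = "https://texttospeech.googleapis.com/v1/text:synthesize"


def build_synthesize_body(
    text: str,
    language_code: str = "en-US",
    voice_name: str = "en-US-Wavenet-D",           # example WaveNet voice
    device_profile: str = "handset-class-device",  # example audio profile
) -> Dict[str, Any]:
    """Build the request body for a synthesis call tuned to a playback device."""
    return {
        "input": {"text": text},
        "voice": {"languageCode": language_code, "name": voice_name},
        "audioConfig": {
            "audioEncoding": "MP3",
            "effectsProfileId": [device_profile],
        },
    }


def decode_audio(response_json: Dict[str, Any]) -> bytes:
    """The response carries the audio as a base64 string in `audioContent`."""
    return base64.b64decode(response_json["audioContent"])


body = build_synthesize_body("Your order has shipped.")
print(json.dumps(body, indent=2))
```

Choosing a profile that matches the target device (a phone speaker versus headphones, for example) lets the service apply post-processing suited to that playback path.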

9. Speechify API

Best For: Publishers and educators looking to provide voice-based content.

Overview: Speechify started as a TTS tool for dyslexia but has evolved into a voice cloning platform with emotional and realistic AI voices.

Key Features:

- Natural voices optimized for reading

- Emotional variation

- Supports multiple languages and accents

Use Case: eBooks, education platforms, personal productivity

Pros:

- Optimized for clarity and comprehension

- Browser extensions and API

- Ideal for long-form narration

Cons:

- Custom voice cloning not available to all users

10. Voice.ai

Best For: Real-time voice transformation and entertainment.

Overview: Voice.ai offers real-time voice cloning and transformation APIs, popular among streamers, content creators, and gamers.

Key Features:

- Real-time voice morphing

- Clone celebrity or fictional voices

- API for integration in apps and games

Use Case: Live streaming, gaming, avatars, AR/VR

Pros:

- Real-time processing

- Fun, creative use cases

- Voice-changing filters

Cons:

- Less suited for professional content or B2B use

Choosing the Right Voice Cloning API

When selecting a voice cloning API, consider:

| Factor | What to Look For |
| --- | --- |
| Voice Quality | Neural or WaveNet voices, expressiveness, prosody |
| Customization | Ability to train on your voice or brand |
| Speed & Scalability | Real-time response, concurrent requests |
| Security | Consent policies, GDPR compliance, privacy options |
| Ease of Integration | SDKs, RESTful APIs, sample code |
| Pricing | Free tiers, per-character or per-minute billing |
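
Because pricing models differ, it helps to run the numbers for your own workload before committing. The sketch below compares per-character and per-minute billing. All rates and volumes in it are made-up illustrations, not any vendor's actual pricing, and the function names are hypothetical.

```python
def estimate_character_cost(
    characters: int,
    price_per_million_chars: float,
    free_chars: int = 0,
) -> float:
    """Cost under per-character billing, after subtracting any free allowance."""
    billable = max(0, characters - free_chars)
    return billable / 1_000_000 * price_per_million_chars


def estimate_minute_cost(audio_minutes: float, price_per_minute: float) -> float:
    """Cost under per-minute billing of generated audio."""
    return audio_minutes * price_per_minute


# Hypothetical workload: 2.5M characters per month, 1M of them free, $16 per million.
per_character = estimate_character_cost(2_500_000, 16.0, free_chars=1_000_000)

# Hypothetical alternative: the same content comes to 90 minutes at $0.25 per minute.
per_minute = estimate_minute_cost(90, 0.25)

cheaper = "per-character" if per_character < per_minute else "per-minute"
print(f"per-character: ${per_character:.2f}, per-minute: ${per_minute:.2f} -> {cheaper}")
```

With these example inputs, the per-character plan costs 1.5 million billable characters times $16 per million, or $24.00, while the per-minute plan costs $22.50.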

AI Voice Cloning APIs and the Future of Voice Technology

As artificial intelligence pushes deeper into human interaction, AI voice cloning APIs are taking center stage, redefining how we communicate, express ourselves, and engage in digital ecosystems. From virtual assistants to immersive gaming and content creation, synthetic voice technology has become a transformative force across industries, especially for developers, creators, and product teams.

The Rise of AI-Driven Voice Experiences Across the Development Landscape

Voice is the next major interface — and developers across every stack and platform are already adapting to this shift. Full Stack Developers, Backend Developers, and Node.js Developers, for instance, are integrating TTS APIs into scalable server-side applications, powering call centers, chatbots, and virtual assistants.

Frontend Developers, JavaScript Developers, and Vue.js Developers are bringing synthetic voice to life through interactive UIs that narrate user flows, deliver notifications, or guide accessibility features. Whether you’re working with React.js, AngularJS, or Next.js, voice APIs can easily plug into SPA (single-page application) frameworks via RESTful endpoints or WebSockets.

For Mobile App Developers, including those focused on iOS, Android, Flutter, Ionic, and React Native, integrating these APIs can enhance user retention and engagement — especially in language learning apps, entertainment, and fitness platforms where voice personalization plays a key role.

Personalized, Programmable Voices for the New Era of Apps

What makes AI voice cloning revolutionary isn’t just how realistic the output is — it’s how programmable, scalable, and customizable these voices have become. This is especially relevant for:

- Python Developers and Django Developers building content pipelines that require dynamic narration.

- PHP, Laravel, and ASP.NET Developers creating multilingual eCommerce platforms with voice-guided navigation.

- Shopify, Magento, and WordPress Developers who can embed voice-based product recommendations and personalized welcome greetings on stores.

- Joomla Developers designing CMS plugins that convert blog content to podcasts in real time.
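
Pipelines like these usually have to split long articles into smaller requests, since TTS endpoints commonly cap the text accepted per call. Here is a minimal Python sketch that splits on sentence boundaries. The 500-character default is an arbitrary placeholder rather than any provider's real limit, and `chunk_text` is a hypothetical helper.

```python
import re
from typing import List


def chunk_text(text: str, max_chars: int = 500) -> List[str]:
    """Split text into chunks of at most `max_chars`, breaking between sentences."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate
            continue
        if current:
            chunks.append(current)
        # A single sentence longer than the limit is split at the character limit.
        while len(sentence) > max_chars:
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        current = sentence
    if current:
        chunks.append(current)
    return chunks


post = "Voice is the next interface. Developers are adapting! Are you ready?"
for index, chunk in enumerate(chunk_text(post, max_chars=40)):
    print(index, chunk)
```

Splitting at sentence boundaries matters for audio quality: cutting mid-sentence tends to produce unnatural intonation where the separately generated clips are joined.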

For Java Developers and those using Spring Boot, voice cloning APIs can be layered into complex backend systems, especially those powering CRM, ERP, and financial tools — providing AI-powered, voice-first interfaces for reports, updates, or alerts.

Beyond Code: Ethics, Ownership & Developer Responsibility

As voice cloning tools get better, faster, and more accessible, it's critical that Software Developers, Open Source Developers, and platform maintainers uphold ethical standards. This includes safeguarding consent when cloning voices, preventing misuse through watermarking, and educating users about the synthetic nature of AI voices.

DevOps Engineers play a key role here: ensuring cloned voices are securely stored, model usage is logged for audit, and voice synthesis workflows meet compliance requirements (such as GDPR or HIPAA). Likewise, SaaS Developers must evaluate data retention policies and use secure APIs when deploying AI voice features at scale.
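
One way to turn these principles into code is to refuse synthesis unless a valid consent record exists, and to log every request for later audit. The sketch below is a minimal illustration with hypothetical names (`ConsentRecord`, `authorize_cloning`, `audit_entry`). A real system would also need secure storage, identity verification, and legal review.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ConsentRecord:
    speaker_id: str
    granted: bool
    expires_at: datetime  # timezone-aware expiry of the speaker's consent


class ConsentError(Exception):
    """Raised when a voice may not be cloned or synthesized."""


def authorize_cloning(record: ConsentRecord, now: Optional[datetime] = None) -> None:
    """Raise ConsentError unless consent was granted and has not expired."""
    now = now or datetime.now(timezone.utc)
    if not record.granted:
        raise ConsentError(f"speaker {record.speaker_id} has not granted consent")
    if now >= record.expires_at:
        raise ConsentError(f"consent for speaker {record.speaker_id} has expired")


def audit_entry(record: ConsentRecord, text: str, now: Optional[datetime] = None) -> str:
    """Return a JSON log line; the text is hashed so the log holds no raw content."""
    now = now or datetime.now(timezone.utc)
    return json.dumps(
        {
            "speaker_id": record.speaker_id,
            "text_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
            "timestamp": now.isoformat(),
        },
        sort_keys=True,
    )
```

A synthesis endpoint would call `authorize_cloning` first and write the `audit_entry` line only after the check passes, so every generated clip can be traced back to a consenting speaker.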

Powering Conversational Interfaces in Web & App Products

For Web Developers, especially those working with HTML5, TypeScript, or using modern build tools like Vite.js, voice APIs offer new avenues to improve UX. Websites that speak — literally — can boost inclusivity, conversion, and engagement, especially in regions with low literacy.

UI/UX Designers, in collaboration with JavaScript or MEAN/MERN Stack Developers, are now able to create responsive interfaces that talk back, dynamically shifting tone based on context. A portfolio site, for instance, can explain sections in a calm, human-sounding voice. An onboarding screen in a SaaS tool can guide users step-by-step, using a cheerful assistant tone — without needing a video.

These nuanced use cases highlight how important the emotional fidelity of cloned voices has become.

Voice Cloning in Blockchain, eCommerce, and Cloud-Native Apps

The growing Web3 and Blockchain ecosystem has found novel ways to use voice cloning — such as creating NFTs with embedded voice identity, or generating AI anchors for decentralized content platforms. Blockchain Developers are increasingly exploring AI integrations that include both visual and auditory elements to enrich immersive experiences.

AWS Developers, on the other hand, are deploying voice synthesis within cloud-native architectures, using services like Amazon Polly and integrating third-party APIs for real-time voice agents. With Lambda functions handling synthesis requests and S3 buckets providing storage and event triggers, voice interactions can be deployed with relatively little code.
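
As a sketch of that pattern, the Lambda-style handler below asks Amazon Polly for an MP3 and stores it in S3. The `synthesize_speech` and `put_object` calls are standard boto3 operations, but the event fields (`text`, `bucket`, `key`), the helper name `synthesize_to_s3`, and the default voice are assumptions made for illustration. The clients are passed in as parameters so the core logic can be tested without AWS credentials.

```python
from typing import Any, Dict


def synthesize_to_s3(
    polly: Any,
    s3: Any,
    text: str,
    bucket: str,
    key: str,
    voice_id: str = "Joanna",  # example Polly voice
) -> str:
    """Synthesize `text` with Polly and upload the MP3 to S3; return its URI."""
    response = polly.synthesize_speech(Text=text, OutputFormat="mp3", VoiceId=voice_id)
    audio_bytes = response["AudioStream"].read()
    s3.put_object(Bucket=bucket, Key=key, Body=audio_bytes, ContentType="audio/mpeg")
    return f"s3://{bucket}/{key}"


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, str]:
    """Entry point for AWS Lambda; the event shape here is hypothetical."""
    import boto3  # imported lazily so the helper above runs without boto3 installed

    uri = synthesize_to_s3(
        boto3.client("polly"),
        boto3.client("s3"),
        text=event["text"],
        bucket=event["bucket"],
        key=event["key"],
    )
    return {"audio_uri": uri}
```

The Lambda execution role would need permission for `polly:SynthesizeSpeech` and `s3:PutObject` on the target bucket.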

Similarly, Express.js Developers building microservices can use voice APIs for dynamic content narration, voice surveys, or even security features — like verifying voice patterns for authentication.

Voice for All: Accessibility and Inclusion as a Developer Mandate

For Software Testers, AI voice APIs open new testing vectors. Does the voice match emotional expectations? Is the pronunciation accurate across all supported languages? Are there any latency issues in real-time playback?
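
The latency question in particular lends itself to automation. The sketch below measures time to first audio chunk and total synthesis time for any streaming synthesizer. `fake_synthesize` is a stand-in that sleeps briefly and yields bytes. In a real test you would replace it with a wrapper around your provider's streaming client, and all names here are hypothetical.

```python
import time
from typing import Callable, Dict, Iterable, Iterator, Optional


def measure_streaming_latency(
    synthesize: Callable[[str], Iterable[bytes]],
    text: str,
) -> Dict[str, Optional[float]]:
    """Consume a streaming synthesizer and record basic timing metrics."""
    start = time.perf_counter()
    first_chunk_at: Optional[float] = None
    total_bytes = 0
    for chunk in synthesize(text):
        if first_chunk_at is None:
            first_chunk_at = time.perf_counter()
        total_bytes += len(chunk)
    end = time.perf_counter()
    return {
        "time_to_first_chunk_s": None if first_chunk_at is None else first_chunk_at - start,
        "total_time_s": end - start,
        "total_bytes": float(total_bytes),
    }


def fake_synthesize(text: str) -> Iterator[bytes]:
    """Stand-in for a real streaming API: one delayed chunk per word."""
    for word in text.split():
        time.sleep(0.01)
        yield word.encode("utf-8")


metrics = measure_streaming_latency(fake_synthesize, "hello world")
print(metrics)
```

Time to first chunk is usually the number users feel in real-time playback, so it is worth tracking separately from total generation time and asserting against a budget in CI.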

Accessibility is also a major priority — and developers working across the spectrum, from Tailwind CSS to WordPress, are now expected to deliver voice-first experiences that go beyond screen readers. Instead of robotic narration, users with visual or motor impairments can now access emotionally rich voice assistants — whether on web, app, or even smart speakers.

Closing the Loop: Developer Roles in the Voice Economy

The future of voice cloning will be built not just by AI researchers, but by you — the developers. Whether you’re building an eCommerce site in Magento, a CRM in Spring Boot, a cross-platform mobile app in Flutter, or an interactive website in Vue.js — voice cloning APIs offer an extra layer of depth, personality, and usability.

And as the ecosystem grows, we'll see:

- iPhone App Developers creating voice-based journaling tools.

- Android Developers launching voice-driven health check-in apps.

- React Native Developers shipping multi-language storytelling platforms for kids.

- Ruby on Rails (RoR) Developers deploying customer engagement bots with cloned brand voices.

In the same way that we now recognize UI libraries like Bootstrap or Tailwind CSS as core to good design, voice identity will soon be core to brand and product identity.

Conclusion

The future of voice cloning isn’t just about replication. It’s about expression, inclusivity, scale, and trust. Every developer, from TypeScript coders to Shopify plugin creators, now has the opportunity to create experiences that speak their users’ language, in each user’s preferred voice, tone, and emotion.

As we look ahead, developers must continue to ask:

- Are we cloning voices with informed consent?

- Are our apps respecting data privacy and security?

- Are we using synthetic voices to enhance experiences, not deceive users?

With responsible use, AI voice cloning APIs can become as essential as any authentication library, payment gateway, or UI framework — offering a voice to our platforms, products, and even people who have lost theirs.