Kubernetes has transformed how we build and scale applications by orchestrating and managing containers at scale across a cluster. Without proper management, however, containerized infrastructure can also lead to wasted resources and ballooning cloud bills. Optimizing costs in Kubernetes isn’t just about turning off unused pods; it’s a strategic effort combining visibility, automation, governance, and FinOps practices. This article explores five tools that make Kubernetes more cost-efficient through monitoring, rightsizing, automation, and policy enforcement.

1. OpenCost — The Foundation for Kubernetes Cost Visibility

OpenCost is an open-source tool that exposes real-time cost metrics directly from your Kubernetes cluster. It breaks down spending by namespace, deployment, or label, helping teams understand exactly where costs originate. Beyond basic reporting, OpenCost integrates seamlessly with Grafana dashboards to visualize trends and anomalies over time. Teams can correlate cost spikes with specific deployments or autoscaling events, enabling faster root-cause analysis and proactive cost governance. This granular insight lays the foundation for any FinOps practice within Kubernetes environments.

Example: A DevOps engineer deploys OpenCost via Helm and connects it to Prometheus. By reviewing per-pod spend, they identify underutilized workloads consuming unnecessary resources and rightsize them, cutting compute costs by 20%.
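To make the idea of a per-namespace breakdown concrete, here is a minimal Python sketch of request-based cost allocation. It is a simplified illustration, not OpenCost's actual pricing model: the prices, the pod records, and the `cost_by_namespace` helper are all hypothetical.

```python
from collections import defaultdict

# Hypothetical hourly prices; real values depend on your cloud provider.
CPU_PRICE_PER_CORE_HOUR = 0.03
MEM_PRICE_PER_GIB_HOUR = 0.004


def cost_by_namespace(pods):
    """Sum a simple request-based cost estimate per namespace."""
    totals = defaultdict(float)
    for pod in pods:
        hourly = (pod["cpu_cores"] * CPU_PRICE_PER_CORE_HOUR
                  + pod["mem_gib"] * MEM_PRICE_PER_GIB_HOUR)
        totals[pod["namespace"]] += hourly * pod["hours"]
    return dict(totals)


# Made-up pod records: requested CPU/memory and hours of runtime.
pods = [
    {"namespace": "checkout", "cpu_cores": 2.0, "mem_gib": 4.0, "hours": 24},
    {"namespace": "checkout", "cpu_cores": 1.0, "mem_gib": 2.0, "hours": 24},
    {"namespace": "batch", "cpu_cores": 4.0, "mem_gib": 8.0, "hours": 6},
]

costs = cost_by_namespace(pods)
# Print namespaces from most to least expensive.
for namespace, cost in sorted(costs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{namespace}: ${cost:.2f}")
```

Grouping by a label or deployment instead of a namespace only requires changing the key used in `totals`.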

2. Kubecost — Comprehensive Cost Analysis and Recommendations

Kubecost builds upon OpenCost, adding rich visualizations, forecasting, and actionable insights. It identifies idle workloads, suggests cheaper instance types, and enforces cost-saving rules within CI/CD pipelines. Kubecost also supports multi-tenant cost allocation, making it well suited to teams running shared clusters. It can connect to cloud provider APIs to reconcile in-cluster cost estimates with actual cloud billing data. With continuous cost alerts and Slack integrations, teams stay informed and in control without combing through dashboards daily.

Example: A SaaS team integrates Kubecost with their multi-cluster setup. It recommends scaling down unused namespaces at night and switching certain workloads to spot instances — saving thousands monthly without manual audits.
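The kind of idle-workload check described above can be sketched in a few lines of Python. This is an assumption-laden illustration rather than Kubecost's own logic: the 20% efficiency threshold, the workload records, and the `find_idle_workloads` helper are hypothetical.

```python
def find_idle_workloads(workloads, threshold=0.2):
    """Return (name, efficiency, wasted_monthly_cost) for workloads whose
    CPU usage is below `threshold` of what they request."""
    idle = []
    for w in workloads:
        if w["cpu_requested"] <= 0:
            continue  # No request set, so efficiency is undefined.
        efficiency = w["cpu_used"] / w["cpu_requested"]
        if efficiency < threshold:
            wasted = w["monthly_cost"] * (1 - efficiency)
            idle.append((w["name"], round(efficiency, 2), round(wasted, 2)))
    # Largest estimated waste first.
    return sorted(idle, key=lambda item: item[2], reverse=True)


# Made-up workloads: average CPU used vs. requested, and monthly cost in USD.
workloads = [
    {"name": "report-generator", "cpu_requested": 4.0, "cpu_used": 0.4, "monthly_cost": 300},
    {"name": "api-gateway", "cpu_requested": 2.0, "cpu_used": 1.5, "monthly_cost": 150},
    {"name": "legacy-cron", "cpu_requested": 1.0, "cpu_used": 0.05, "monthly_cost": 80},
]

idle = find_idle_workloads(workloads)
for name, efficiency, wasted in idle:
    print(f"{name}: {efficiency:.0%} efficient, ~${wasted:.2f}/month idle")
```

Sorting by estimated waste puts the candidates with the largest potential savings at the top of the review list.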

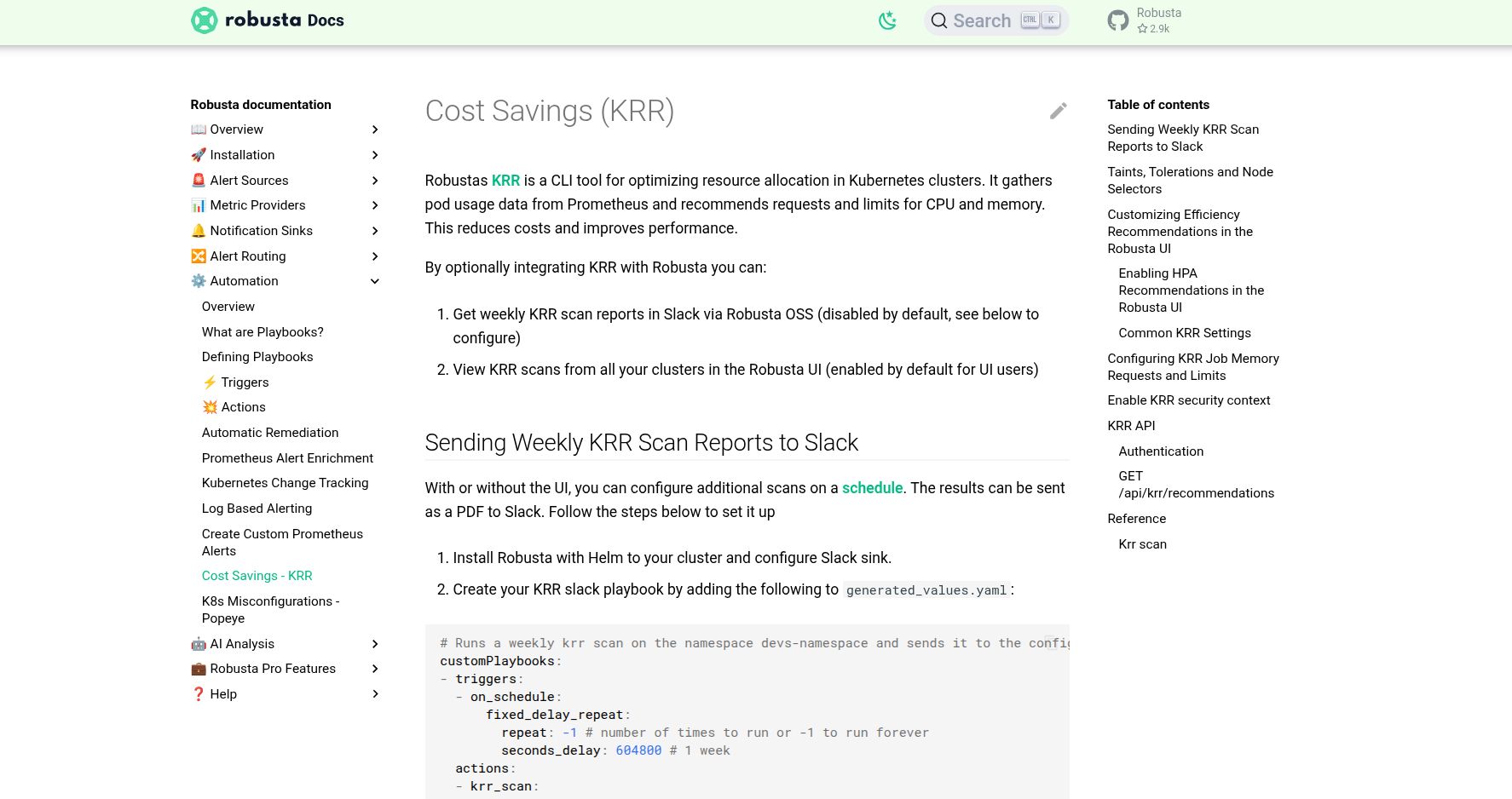

3. Kubernetes Resource Recommender (KRR) — Rightsizing with Metrics

KRR analyzes historical Prometheus metrics to recommend optimal CPU and memory requests for each workload. By eliminating guesswork, it prevents over-allocation and ensures resources match real usage. KRR plays a critical role in continuous optimization workflows. When combined with Horizontal Pod Autoscalers (HPA), it ensures workloads adapt dynamically to demand while maintaining right-sized baseline resources. This blend of static and dynamic optimization helps avoid both performance bottlenecks and budget overruns in production.

Example: A data processing team uses KRR to fine-tune resource requests for high-load jobs. The new recommendations reduce CPU waste by 30%, improving both cost efficiency and cluster stability.
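A common way to turn historical usage into request recommendations is to take a high percentile of CPU usage and add a safety buffer to peak memory. The Python sketch below shows that general approach; the 95th percentile, the 15% buffer, and the sample values are assumptions for illustration and are not claimed to match KRR's exact strategy.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend(cpu_samples, mem_samples, cpu_pct=95, mem_buffer_pct=15):
    """Suggest a CPU request from a usage percentile and a memory request
    from peak usage plus a percentage buffer."""
    return {
        "cpu_request_cores": percentile(cpu_samples, cpu_pct),
        "memory_request_mib": math.ceil(max(mem_samples) * (100 + mem_buffer_pct) / 100),
    }


# Made-up usage history: CPU in cores (with one short spike), memory in MiB.
cpu_samples = [0.10, 0.12, 0.11, 0.14, 0.13, 0.15, 0.12, 0.16, 0.18, 0.17,
               0.14, 0.13, 0.19, 0.20, 0.15, 0.16, 0.22, 0.21, 0.25, 0.90]
mem_samples = [210, 225, 240, 232, 228, 260, 245, 238, 229, 231]

rec = recommend(cpu_samples, mem_samples)
print(rec)
```

Using a percentile rather than the maximum keeps a single short CPU spike (0.90 cores here) from inflating the request, while memory is sized from the peak because running out of memory is less forgiving than brief CPU throttling.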

4. OptScale — FinOps and Governance for Multi-Cloud Kubernetes

OptScale helps organizations bring FinOps discipline to their Kubernetes workloads. It identifies unused volumes, abandoned workloads, and untagged resources across environments, offering insights to reclaim spend. OptScale bridges the gap between DevOps and finance by providing unified cost visibility across multi-cloud Kubernetes setups. It supports team-level cost accountability and enforces governance through automated policies. With built-in anomaly detection, OptScale can flag cost spikes before they affect monthly budgets — a must-have feature for enterprise-scale deployments.

Example: A company with AWS EKS and GKE clusters uses OptScale to enforce cost policies — automatically shutting down idle development clusters after work hours and generating budget alerts for high-cost namespaces.
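Cost anomaly detection can be as simple as comparing each day's spend with a trailing average. The following Python sketch illustrates that idea only; it is not OptScale's algorithm, and the 7-day window, the 1.5x threshold, the cost series, and the `flag_cost_anomalies` helper are hypothetical.

```python
def flag_cost_anomalies(daily_costs, window=7, threshold=1.5):
    """Flag days whose cost exceeds `threshold` times the average of the
    preceding `window` days. Returns (day_index, cost, baseline) tuples."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        previous = daily_costs[i - window:i]
        baseline = sum(previous) / len(previous)
        if baseline > 0 and daily_costs[i] > threshold * baseline:
            anomalies.append((i, daily_costs[i], round(baseline, 2)))
    return anomalies


# Made-up daily spend in USD; day 8 contains a spike.
daily_costs = [100, 102, 98, 101, 99, 103, 100, 104, 180, 105]

anomalies = flag_cost_anomalies(daily_costs)
for day, cost, baseline in anomalies:
    print(f"day {day}: ${cost} vs. trailing average ${baseline}")
```

A trailing window adapts to gradual growth in spend, so only sudden jumps are flagged; the trade-off is that a sustained increase stops looking anomalous once it fills the window.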

5. Kube-downscaler — Automate Off-Hours Cost Savings

Kube-downscaler automates the process of scaling down workloads when they aren’t needed. It’s ideal for non-production environments or scheduled workloads that only run during specific time windows. Beyond scheduled scaling, Kube-downscaler supports label-based rules, enabling teams to define granular policies for different namespaces or environments. It can be integrated with CI/CD pipelines to align scaling behavior with deployment cycles — ensuring resources are available when needed and minimized when idle, all without human intervention.

Example: A QA team uses Kube-downscaler to suspend staging deployments overnight. When work resumes in the morning, deployments automatically scale back up — cutting non-productive runtime costs by up to 40%.
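The core decision behind off-hours scaling is a time-window check. The Python sketch below shows that logic in isolation; it is not Kube-downscaler's implementation, and the working hours, the weekday range, and the `desired_replicas` helper are assumptions chosen for illustration.

```python
from datetime import datetime, time


def desired_replicas(now, original_replicas,
                     start=time(8, 0), end=time(19, 0), workdays=range(0, 5)):
    """Return the original replica count inside the uptime window
    (Monday to Friday, start <= time < end), otherwise zero."""
    in_window = now.weekday() in workdays and start <= now.time() < end
    return original_replicas if in_window else 0


# Hypothetical check times for a staging deployment that normally runs 3 replicas.
monday_morning = desired_replicas(datetime(2024, 1, 8, 10, 0), 3)
monday_night = desired_replicas(datetime(2024, 1, 8, 23, 0), 3)
saturday = desired_replicas(datetime(2024, 1, 13, 10, 0), 3)

print(monday_morning, monday_night, saturday)
```

A real controller would also need to remember the original replica count before scaling to zero so that it can restore it when the window reopens.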

Conclusion

As Kubernetes becomes the backbone of cloud-native applications, cost governance can’t be an afterthought. Tools like OpenCost, Kubecost, KRR, OptScale, and Kube-downscaler bring transparency, intelligence, and automation into every stage of your deployment lifecycle. By combining visibility with proactive rightsizing and off-hours scaling, DevOps teams can move toward FinOps maturity: running scalable, efficient, and cost-conscious Kubernetes environments. The key takeaway is that cost optimization in Kubernetes is not a one-time project; it’s a continuous practice powered by visibility, automation, and accountability. Whether you’re managing one cluster or fifty, adopting these tools helps ensure that CPU and memory spend contributes to business value rather than waste.